Why Retrieval-Augmented Generation (RAG) is Essential for Trusting LLMs

Hi friends,

In my recent exploration of Large Language Models (LLMs), I ran into a situation that perfectly illustrates their limitations and the potential of Retrieval-Augmented Generation (RAG) to overcome them.

In today’s newsletter, I’ll briefly discuss the current problems with LLMs, why I have high hopes for RAG, and why I’m actively experimenting with it.


I wanted to make a local RAG application using Ollama with the local llama3.2:latest model.

When I asked Ollama about RAG, it told me that RAG is a role-based action game.

After I got back into my chair and clarified what I was talking about, Ollama still didn’t get the memo.

It “corrected” itself by telling me that RAG is actually a reality-augmented generation, text-based game.

I copied part of the chat for your amusement:

This experience highlighted why skepticism towards LLMs is warranted and how RAG can enhance their reliability.

The Problem with LLMs

LLMs are powerful tools but often provide answers based on statistical likelihood rather than verified information. This can lead to inaccuracies, especially when the model doesn’t have access to the necessary resources or context.

Here are some key issues:

Lack of Source Verification: LLMs typically don’t cite sources, making it difficult to verify the accuracy of their responses.

Statistical Guessing: They tend to offer the most statistically probable answer, even if it’s incorrect.

Inability to Admit Uncertainty: LLMs rarely acknowledge when they don’t know something, which can lead to misleading information. Remember the memes where people convinced a chatbot to agree that 2 + 2 = 5?
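To make the "statistical guessing" point concrete, here is a deliberately tiny sketch. The probabilities are invented for illustration and are not measurements from llama3.2 or any other model, and real models score individual tokens rather than whole phrases. The point is only that picking the most probable option says nothing about whether it is true.

```python
# Toy distribution over completions of "RAG stands for ...".
# These numbers are made up for illustration; they do not come from a real model.
toy_distribution = {
    "role-based action game": 0.46,
    "retrieval-augmented generation": 0.41,
    "I'm not sure": 0.13,
}


def greedy_answer(distribution: dict[str, float]) -> str:
    """Return the single most probable completion, ignoring how close the race was."""
    return max(distribution, key=distribution.get)


answer = greedy_answer(toy_distribution)
print(answer)
```

Notice that the near-tie between the first two options and the small weight on "I'm not sure" are both invisible in the final answer, which is why a confident-sounding reply is not evidence of a correct one.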

The Solution: Incorporating RAG

RAG addresses these issues by adding a retrieval step: before the model answers, the system looks up relevant passages in a predefined dataset and places them in the prompt. The answer is then grounded in actual data rather than in whatever the model happens to find most likely. Here’s how RAG can improve LLMs:

Source-Based Responses: RAG allows LLMs to cite where they found the information, enhancing trust and reliability.

Contextual Accuracy: By using a specific dataset, RAG ensures that responses are relevant and accurate.

Professional Application: In legal teams or software development environments, RAG can provide precise answers based on existing documents or codebases.
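The retrieve-then-answer flow described above can be sketched in a few lines. This is a minimal sketch under several assumptions: the documents and file names are invented, retrieval is a simple word-overlap count standing in for the embedding search a real RAG system would typically use, and `ask_ollama` assumes an Ollama server running locally on its default port with the `llama3.2:latest` model already pulled.

```python
import json
import re
import urllib.request

# Hypothetical mini knowledge base: file names and contents are invented.
DOCUMENTS = {
    "glossary.md": "RAG stands for retrieval-augmented generation. "
                   "It retrieves documents and adds them to the prompt.",
    "setup.md": "Install Ollama, then pull the llama3.2 model before running the app.",
    "games.md": "A role-playing game lets players control characters in a fictional world.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: dict[str, str], top_k: int = 2) -> list[tuple[str, str]]:
    """Rank documents by shared words with the question (a stand-in for embedding search)."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(text)), name, text)
        for name, text in documents.items()
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    # Drop documents that share no words with the question at all.
    return [(name, text) for score, name, text in scored[:top_k] if score > 0]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Put the retrieved passages, tagged with their source, in front of the question."""
    context = "\n".join(f"[{name}] {text}" for name, text in passages)
    return (
        "Answer using only the sources below and cite the file name in brackets. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


def ask_ollama(prompt: str, model: str = "llama3.2:latest") -> str:
    """Send the prompt to a local Ollama server (assumed to listen on the default port)."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        "http://localhost:11434/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


question = "What does RAG stand for?"
passages = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, passages)
print(prompt)
# With Ollama running locally, the grounded answer would come from:
# print(ask_ollama(prompt))
```

Tagging each passage with its file name is what makes citations possible: the model can only point to a source if the source label is in the prompt to begin with.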

Practical Applications of RAG

Would you rely on a legal team backed by ChatGPT?

Neither would I.

Legal teams have many documents. If you need to find specific information from years ago, a RAG-enhanced LLM can search through the documents and provide an accurate answer, citing the source.

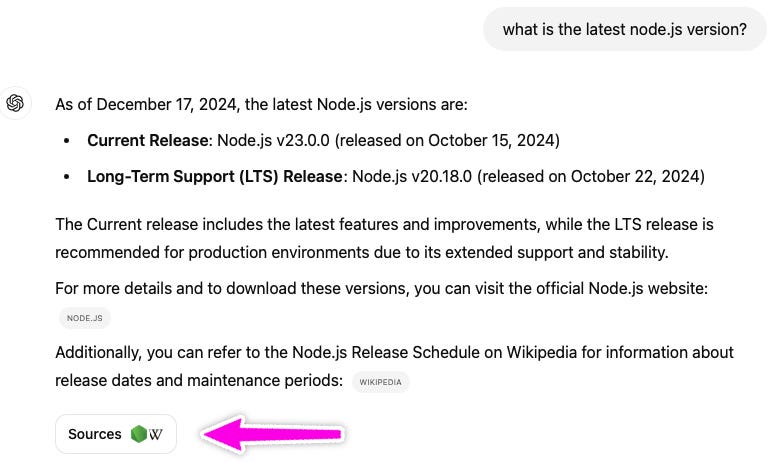

We can already see these approaches implemented by major LLMs, but for publicly available information:

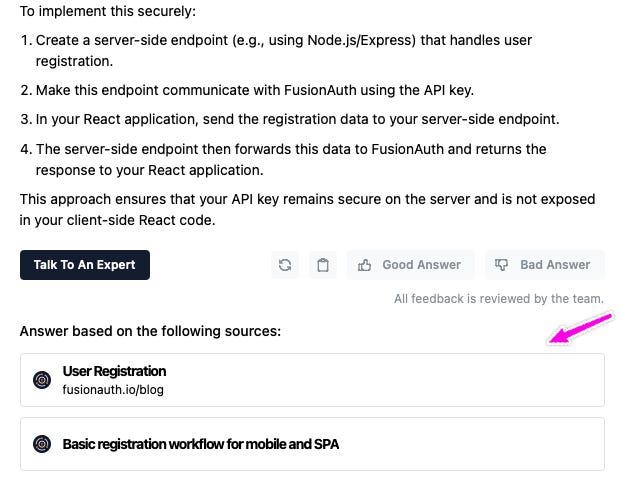

In software development, RAG-powered documentation is bliss.

You don’t have to dig through pages and pages of docs anymore. Simply ask the chatbot and get an answer that cites its sources.

Even wilder, imagine not having to update static user-facing documentation because your library’s use cases can already be found in the source code.
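One way to picture documentation that lives in the source code: walk the code, collect the docstrings, and treat each one as a retrievable passage with a file-and-line citation. The sketch below uses Python’s standard `ast` module. The library source, the `client.py` file name, and the entry format are all hypothetical, chosen only to keep the example self-contained.

```python
import ast

# Hypothetical library source, inlined so the example runs on its own.
SOURCE = '''
def connect(url, timeout=30):
    """Open a connection to the given URL."""
    ...

def close(connection):
    """Close a connection and release its resources."""
    ...

def _internal_helper():
    pass
'''


def extract_docs(source: str, filename: str) -> list[dict]:
    """Collect one entry per documented function, with a file:line citation."""
    entries = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            doc = ast.get_docstring(node)
            if doc:  # undocumented functions are skipped
                entries.append({
                    "source": f"{filename}:{node.lineno}",
                    "name": node.name,
                    "text": doc,
                })
    return entries


entries = extract_docs(SOURCE, "client.py")
for entry in entries:
    print(entry["source"], entry["name"], "-", entry["text"])
```

Entries like these could feed the retrieval step of a RAG pipeline, so an answer about `connect` would point back to the exact line where it is defined.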

Key Benefits of RAG

Enhanced Trust: By providing source-based answers, RAG builds trust in LLMs.

Faster Adoption: With reliable information, users can quickly adopt LLMs into their workflows.

Maintained Accuracy: Because answers are drawn from the dataset, keeping the documents up to date keeps the responses current, without retraining the model.

Looking Forward

I see RAG as a stepping stone toward fully trusting LLM responses, without second-guessing them or Googling a bit more just in case the LLM hallucinated…

As we continue to develop and refine these technologies, the potential for RAG to transform how we interact with LLMs is immense.

By ensuring that responses are accurate and verifiable, RAG can help us rise above the limitations of current LLMs and unlock new possibilities for their use in professional and technical environments.

Imagine that one day you can say with confidence: I trust the AI.

